Phi Hosting, Host Your Phi-4/3/2 with Ollama

Phi is a family of lightweight, state-of-the-art open models from Microsoft, ranging from 2.7B (Phi-2) and 3.8B (Phi-3 Mini) to 14B parameters (Phi-3 Medium and Phi-4). You can deploy your own Phi-4, Phi-3, or Phi-2 model with Ollama.

Choose Your Phi Hosting Plans

Professional GPU VPS - A4000

- 32GB RAM

- 24 CPU Cores

- 320GB SSD

- 300Mbps Unmetered Bandwidth

Express GPU Dedicated Server - P1000

- 32GB RAM

- GPU: Nvidia Quadro P1000

- Eight-Core Xeon E5-2690

- 120GB + 960GB SSD

- 100Mbps-1Gbps

- OS: Windows / Linux

Basic GPU Dedicated Server - GTX 1650

- 64GB RAM

- GPU: Nvidia GeForce GTX 1650

- Eight-Core Xeon E5-2667v3

- 120GB + 960GB SSD

- 100Mbps-1Gbps

- OS: Windows / Linux

Basic GPU Dedicated Server - GTX 1660

- 128GB RAM

- GPU: Nvidia GeForce GTX 1660

- Dual 12-Core E5-2697v2

- 240GB SSD + 2TB SSD

- 100Mbps-1Gbps

- OS: Windows / Linux

Advanced GPU Dedicated Server - RTX 3060 Ti

- 128GB RAM

- GPU: GeForce RTX 3060 Ti

- Dual 12-Core E5-2697v2

- 240GB SSD + 2TB SSD

- 100Mbps-1Gbps

- OS: Windows / Linux

Advanced GPU Dedicated Server - V100

- 128GB RAM

- GPU: Nvidia V100

- Dual 12-Core E5-2697v2

- 240GB SSD + 2TB SSD

- 100Mbps-1Gbps

- OS: Windows / Linux

Enterprise GPU Dedicated Server - RTX 4090

- 256GB RAM

- GPU: GeForce RTX 4090

- Dual 18-Core E5-2697v4

- 240GB SSD + 2TB NVMe + 8TB SATA

- 100Mbps-1Gbps

- OS: Windows / Linux

Enterprise GPU Dedicated Server - A100

- 256GB RAM

- GPU: Nvidia A100

- Dual 18-Core E5-2697v4

- 240GB SSD + 2TB NVMe + 8TB SATA

- 100Mbps-1Gbps

- OS: Windows / Linux
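The plans above differ mainly in GPU memory, and that largely decides which Phi model fits. As a rough sketch, the Bash helpers below suggest an Ollama tag for a given VRAM size. The function names are hypothetical, and the 16GB and 4GB thresholds are assumptions drawn from the sample commands on this page (Phi-4 on 16GB cards such as the A4000, Phi-3 3.8B on 4GB cards such as the P1000 or GTX 1650), not official requirements.

```shell
#!/usr/bin/env bash
# Rough sketch: suggest an Ollama Phi tag for a given amount of GPU memory.
# Thresholds are assumptions based on this page's sample commands, not
# official minimums published by Microsoft or Ollama.

# $1 = total VRAM in GB (integer). Prints a model tag, or nothing if below 4GB.
pick_phi_model() {
  local vram_gb="$1"
  if (( vram_gb >= 16 )); then
    echo "phi4"        # Phi-4, 14B parameters
  elif (( vram_gb >= 4 )); then
    echo "phi3:3.8b"   # Phi-3 Mini, 3.8B parameters
  fi
}

# Read the first GPU's total memory (MiB) via nvidia-smi and convert it to GB.
# Cards may report slightly under their nominal size, so round to the
# nearest GB instead of truncating (a 16GB card should still map to 16).
detect_vram_gb() {
  local mib
  mib="$(nvidia-smi --query-gpu=memory.total --format=csv,noheader,nounits | head -n 1)"
  echo $(( (mib + 512) / 1024 ))
}
```

On a server with an Nvidia driver installed, `ollama run "$(pick_phi_model "$(detect_vram_gb)")"` starts the suggested model. On cards with less than 4GB the helper prints nothing, so check its output before passing it to `ollama run`.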

6 Reasons to Choose Our GPU Servers for Phi-4/3/2 Hosting

NVIDIA GPU

SSD-Based Drives

Full Root/Admin Access

99.9% Uptime Guarantee

Dedicated IP

24/7/365 Technical Support

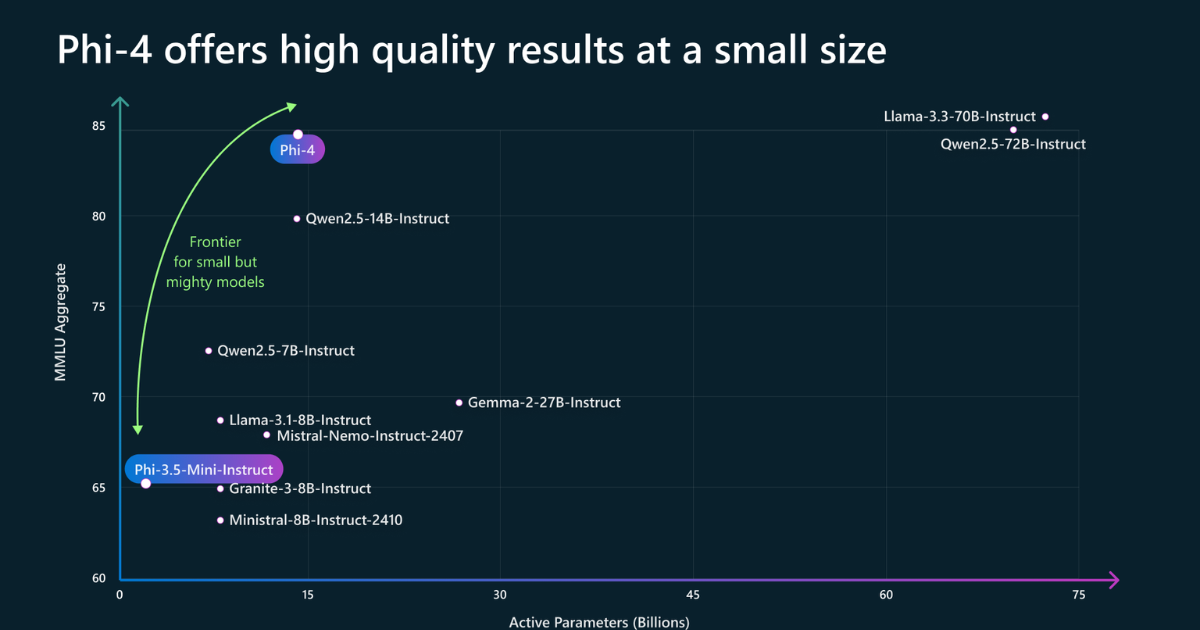

Phi-4 vs Qwen2.5 vs Gemma2 vs Llama3: Benchmark Performance

Key Features of Phi-4

Compact and Efficient

High Performance

Trained on High-Quality Data

Potential for Local AI

How to Run Phi-4/3/2 LLMs with Ollama

Order and Log In to a GPU Server

Download and Install Ollama

Run Phi-4/3/2 with Ollama

Chat with Phi-4/3/2
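Besides the interactive prompt that `ollama run` opens, Ollama serves a local REST API (by default on port 11434), so scripts can chat with Phi as well. A minimal sketch, assuming Bash with `curl` and `sed` available, posting to Ollama's `/api/chat` endpoint. The helper names `json_escape`, `phi_chat_payload`, and `phi_chat`, and the `OLLAMA_URL` variable, are hypothetical, and the simple escaping only handles single-line prompts.

```shell
#!/usr/bin/env bash
# Sketch: chat with a Phi model through Ollama's local REST API.
# Assumes Ollama listens on its default address and the model is already pulled.
# OLLAMA_URL is a hypothetical override variable used only by this script.
OLLAMA_URL="${OLLAMA_URL:-http://localhost:11434}"

# Escape backslashes, then double quotes, so text can sit inside a JSON string.
# Single-line input only: newlines and other control characters are not escaped.
json_escape() {
  printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g'
}

# Print a non-streaming /api/chat request body for model $1 and prompt $2.
phi_chat_payload() {
  local model="$1" prompt
  prompt="$(json_escape "$2")"
  printf '{"model":"%s","messages":[{"role":"user","content":"%s"}],"stream":false}' \
    "$model" "$prompt"
}

# Send the request; the JSON reply carries the answer in message.content.
phi_chat() {
  phi_chat_payload "$1" "$2" | curl -s "$OLLAMA_URL/api/chat" -d @-
}
```

For example, `phi_chat phi4 "Summarize the Phi model family in one sentence."` returns a single JSON object because streaming is turned off; the assistant's reply is in its `message.content` field.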

Sample Command Lines

```shell
# Install Ollama on Linux
curl -fsSL https://ollama.com/install.sh | sh

# On the GPU VPS - A4000 (16GB), V100, or higher plans, you can run Phi-4 14B and Phi-3 14B
ollama run phi4
ollama run phi3:14b

# On the P1000, GTX 1650, or higher dedicated server plans, you can run Phi-3 3.8B and Phi-2 2.7B
ollama run phi3:3.8b
ollama run phi
```
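After running one of the commands above, `ollama ps` lists the loaded models and, in its PROCESSOR column, how each one is split between CPU and GPU. A minimal sketch, assuming that column reads `100% GPU` when the whole model sits in VRAM. `model_on_gpu` is a hypothetical helper, and it expects the full model name as `ollama ps` prints it (for example `phi4:latest`).

```shell
#!/usr/bin/env bash
# Sketch: check that a model is fully loaded on the GPU.
# Assumes `ollama ps` prints a header row, then one row per loaded model
# whose PROCESSOR column reads "100% GPU" when nothing is offloaded to the CPU.

# Reads `ollama ps` output on stdin; succeeds if model $1 is fully on the GPU.
model_on_gpu() {
  local model="$1"
  # Skip the header, match the NAME column exactly, and look for "100% GPU".
  awk -v m="$model" 'NR > 1 && $1 == m && /100% GPU/ { found = 1 } END { exit !found }'
}
```

Usage: `ollama ps | model_on_gpu phi4:latest && echo "Phi-4 is fully on the GPU"`. If the check fails, part of the model is running on the CPU, which slows generation; a plan with more GPU memory or a smaller Phi model avoids that.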

FAQs of Phi Hosting

What is the Phi series?

The Phi series is a family of small, efficient language models developed by Microsoft, designed to achieve high performance with a relatively small model size compared to models like GPT-4 or Llama 3.

What is Phi-4?

Phi-4 is a 14B parameter, state-of-the-art open model built upon a blend of synthetic datasets, data from filtered public domain websites, and acquired academic books and Q&A datasets. It is the latest generation of the Phi series and aims to improve performance while keeping the model efficient.
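A back-of-the-envelope estimate shows why a 14B model needs a 16GB-class GPU. Counting only the weights, memory is roughly the parameter count $N$ times the bits per weight $b$, divided by 8. This is a simplified sketch: it assumes about 4 bits per weight for a quantized build and ignores the context (KV) cache and runtime overhead.

```latex
\begin{align*}
M_{\text{weights}} &\approx \frac{N \cdot b}{8}\ \text{bytes} \\
b = 16:\quad M_{\text{weights}} &\approx \frac{14\times10^{9} \cdot 16}{8} = 28\times10^{9}\ \text{bytes} \approx 28\ \text{GB} \\
b = 4:\quad M_{\text{weights}} &\approx \frac{14\times10^{9} \cdot 4}{8} = 7\times10^{9}\ \text{bytes} \approx 7\ \text{GB}
\end{align*}
```

A 16-bit copy of Phi-4 would not fit in 16GB of VRAM, while a roughly 4-bit quantized copy leaves room for the context cache. Real quantization formats store slightly more than 4 bits per weight, so actual model files are somewhat larger than this estimate.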

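Can Phi-4 run on local devices?

Yes, as long as the device has enough GPU memory. The 14B Phi-4 model runs with Ollama on a 16GB GPU such as the A4000, while the smaller Phi-3 3.8B and Phi-2 2.7B models run on entry-level 4GB cards such as the P1000 or GTX 1650.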

What is special about the Phi models?

Efficiency: They deliver strong reasoning, coding, and general understanding with fewer parameters.

Training Data: They use high-quality “textbook-like” datasets rather than just internet-scale crawling.

On-Device Potential: Some versions are small enough to run locally, making them suitable for mobile, IoT, and edge AI applications.